Object Recognition in Point Clouds Using the 3D CAD Object Models

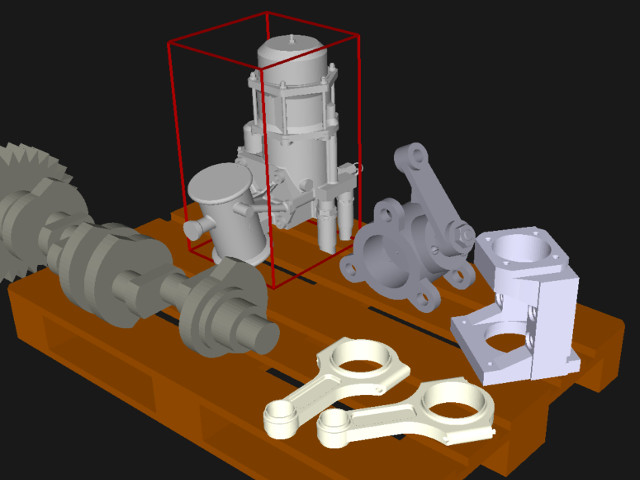

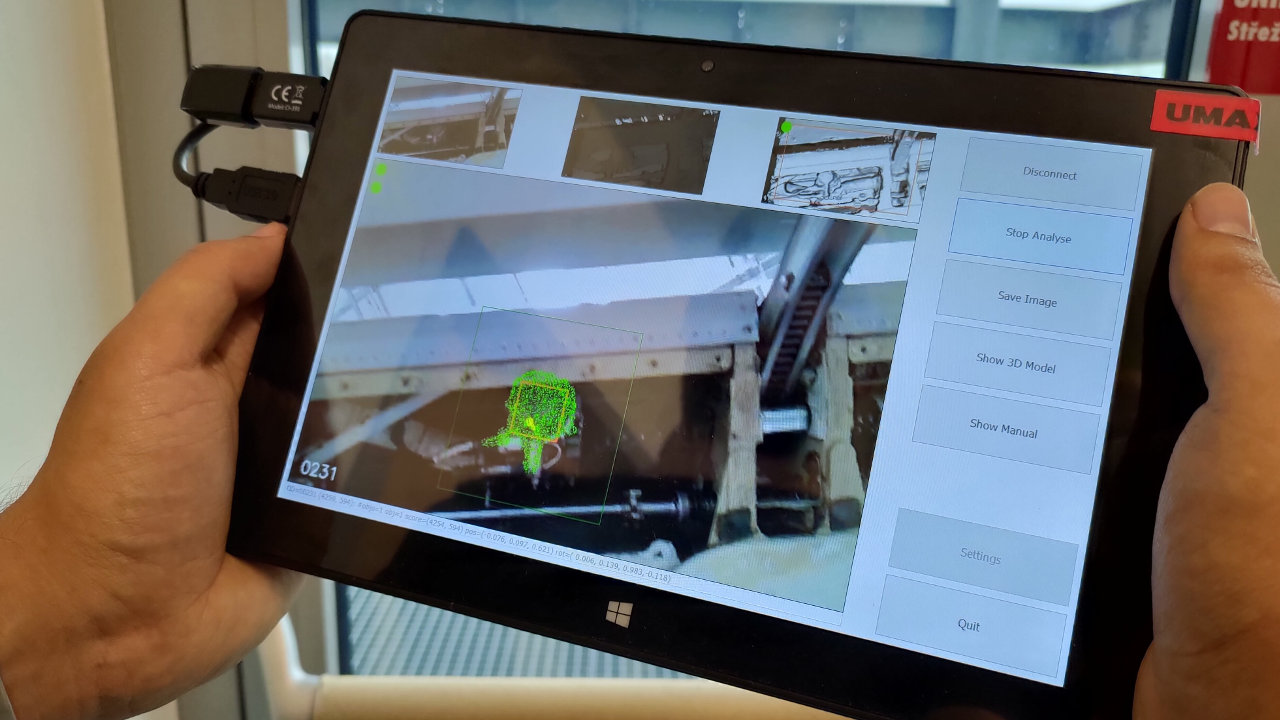

The goal was to develop the software for recognising 3D objects described by their CAD shape models (obj, stl, or ply files) in the scenes that are captured by affordable depth sensors (e.g. RealSense from Intel). Recognising the objects described in this way (and determining their pose) may be useful for various purposes, e.g. for augmented reality and robotic applications. The software should be capable to work in the real-life scenarios.

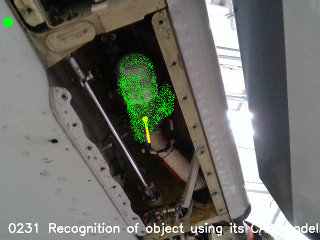

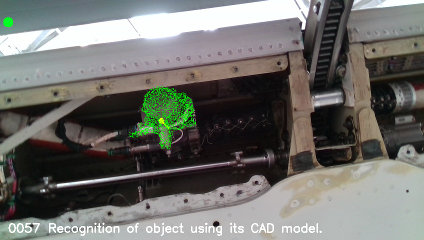

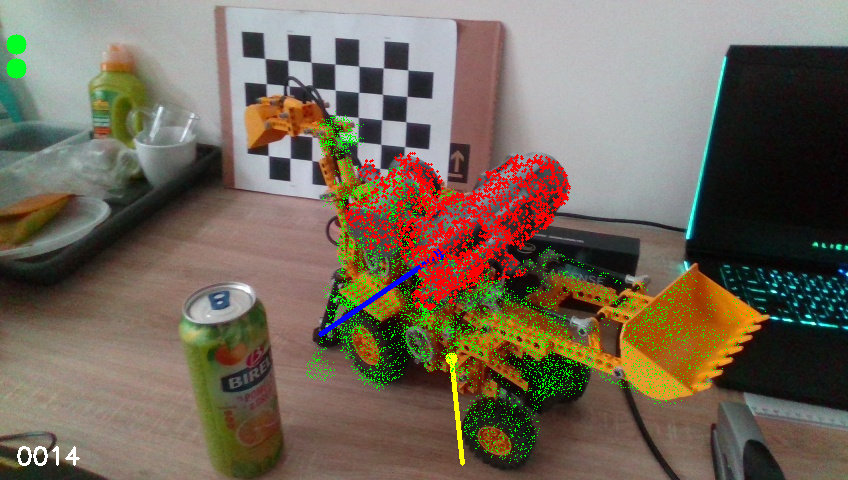

The algorithm can be outlined as follows. (1) The depth maps obtained from the sensor are integrated to obtain a filtered map or even a partial 3D model of scene. (2) The key points and their descriptors are computed, and the descriptors are compressed to their optimal size. (3) The correspondences between the key points of the scene and the key points of particular models are found and filtered. (4) On the basis of correspondences, a certain number of candidate objects are determined together with their positions. (5) The candidates are verified, and the final selection is carried out. From a very general point of view, the mentioned sequence of steps might be regarded as a state-of-the-art technique for solving the problem. However, many theoretical and practical problems have had to be solved to make the recogniser work properly for the real-life scenes and models, and in real-time. CUDA parallelisation has been massively used. A thin client for in-hand use, WiFi connected to a bigger computer, has been developed too.

Video Samples